Home Assistant: Exploring location history

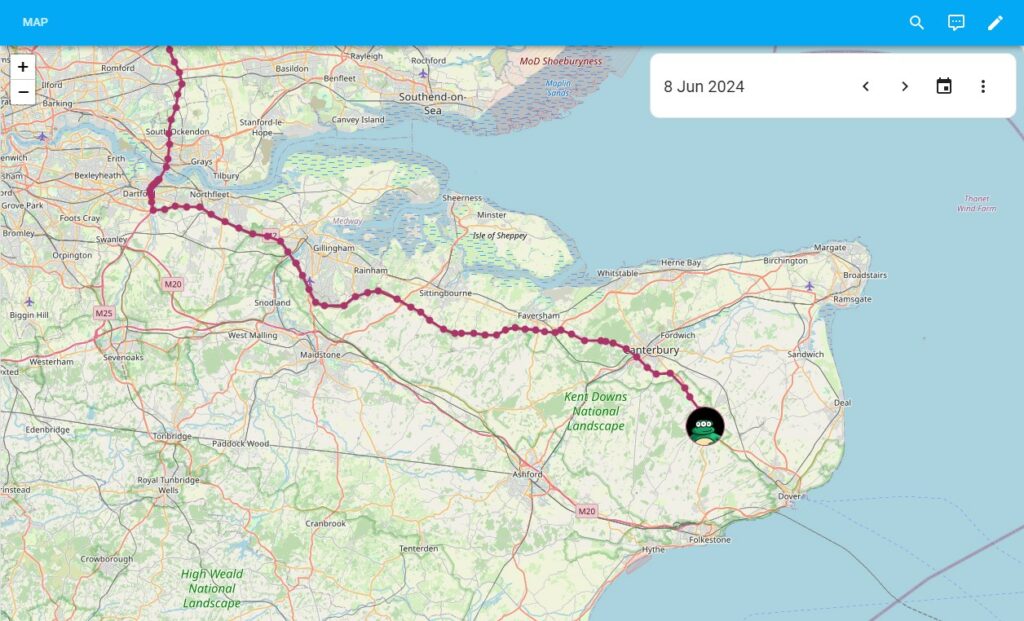

If you’ve managed to set up a good source of location data in Home Assistant, then there is a good chance you will have tried the out-of-the-box Map card and been a bit disappointed. While it can get your trackers showing on a map, when it comes to digging into the history data the functionality is painfully limited.

As a big user of the rich capabilities available in other areas of Home Assistant, such as the energy dashboards, I set out to find a way of getting similar capabilities for location data on maps as well.

One of the great things with Home Assistant, and open source projects in general, is that when you do find a gap like this you have the chance to get involved and scratch your own itch. As someone with very limited experience with Home Assistant’s frontend codebase, I decided my best bet was to find someone who’d created a HACS map card already, and see if I could use that as the basis to build out my wish list.

As luck would have it, @nathan_gs’s ha-map-card fitted the bill perfectly.

Rather than jumping straight into the deep end and trying to make the date picker work, I started off by picking up some more standard features and bugs from the GitHub issues. I find this is a good way to get a feel for a new codebase and its conventions. Web components and the Lit library were both new to me, so despite my best efforts I did have to read through a little documentation as well.

Fortunately, Nathan turned out to be incredibly receptive to the random features I started throwing his way, which is always a huge encouragement when you start tinkering with something new.

Getting date ranges working

Once I felt I had a basic handle on the codebase, I decided to go after my main wish list feature: integrating with Home Assistant’s built-in energy-date-selection component. The component itself is great, providing the ability to easily navigate history, select date ranges and more. Right now it’s very much tied to Home Assistant’s energy dashboard, although I hope at some point in the future Home Assistant chooses to make it available as a more general feature.

Despite it being meant for the energy dashboards, as the screenshot above shows, it turns out it can indeed be used to drive other features, most notably the map history.

I knew setting out that hooking into the date selection card must be possible, as I already used a number of Home Assistant cards that do so, the awesome ha-sankey-chart for one. Inspired by the Sankey Chart Card approach, I followed its model to create a “DateRangeService” that takes responsibility for waiting for the Home Assistant _energy connection to be ready, then manages the subscription to it once it is. I was then able to use the service to register callbacks in the card, allowing each entity to trigger a redraw of its history data whenever the date range changes.
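To make the idea a bit more concrete, here is a minimal sketch of what such a service could look like. It is not the code from ha-map-card or ha-sankey-chart: the interfaces, the polling interval, the attempt limit and every method name are assumptions made for illustration, and the only detail taken from the description above is that the card waits for an `_energy` entry to appear on the Home Assistant connection and then subscribes to it.

```typescript
// Minimal shapes assumed for this sketch; the real Home Assistant objects are richer.
interface DateRange {
  start: Date;
  end?: Date;
}

interface EnergyCollectionLike {
  // Assumption: subscribe() returns a function that cancels the subscription.
  subscribe(callback: (data: DateRange) => void): () => void;
}

interface HassLike {
  connection: Record<string, unknown>;
}

type RangeListener = (range: DateRange) => void;

class DateRangeService {
  private hass: HassLike;
  private pollMs: number;
  private maxAttempts: number;
  private listeners: RangeListener[] = [];
  private unsubscribe?: () => void;
  private timer?: ReturnType<typeof setTimeout>;
  private latest?: DateRange;

  constructor(hass: HassLike, pollMs = 100, maxAttempts = 100) {
    this.hass = hass;
    this.pollMs = pollMs;
    this.maxAttempts = maxAttempts;
  }

  // Poll until the collection appears, giving up after maxAttempts tries.
  start(attempt = 0): void {
    if (this.tryConnect() || attempt >= this.maxAttempts) return;
    this.timer = setTimeout(() => this.start(attempt + 1), this.pollMs);
  }

  // Subscribe if the collection is ready; returns whether we are connected.
  tryConnect(): boolean {
    if (this.unsubscribe) return true;
    const collection = this.hass.connection["_energy"] as
      | EnergyCollectionLike
      | undefined;
    if (!collection) return false;
    this.unsubscribe = collection.subscribe((data) => this.notify(data));
    return true;
  }

  // Register a callback; replay the latest range so late listeners still draw.
  onDateRangeChange(listener: RangeListener): void {
    this.listeners.push(listener);
    if (this.latest) listener(this.latest);
  }

  // Stop polling, drop the subscription and forget all listeners.
  disconnect(): void {
    if (this.timer !== undefined) clearTimeout(this.timer);
    this.timer = undefined;
    if (this.unsubscribe) this.unsubscribe();
    this.unsubscribe = undefined;
    this.listeners = [];
  }

  private notify(data: DateRange): void {
    this.latest = data;
    for (const listener of this.listeners) listener(data);
  }
}
```

In a card, each entity would register something like `service.onDateRangeChange((range) => redrawHistory(entity, range))`, where `redrawHistory` is a hypothetical function that refetches and redraws that entity’s history, and the card would call `disconnect()` when it is removed from the page.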

Following on from the initial prototype, I ended up cranking out a number of minor features for the Map Card over the last week or two, further exploring some of Home Assistant’s internal capabilities.

Highlights include

- The ability to have card-level history date ranges and optionally override these individually for each entity.

- The ability to use external entities to provide history date range values (for example, an input_number to drive the hours or days ago). This provides an alternative mechanism for exploring the history data. You can even mix and match them.

- Adding more detail to the tooltips and entities themselves, including handling the click events and triggering Home Assistant modals.
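The precedence between card-level ranges, per-entity overrides and externally driven values can be sketched as follows. This is only an illustration of the idea: the option name `hoursAgo`, the types and both function names are hypothetical and are not the card’s actual configuration keys. The one thing borrowed from Home Assistant is that an entity’s state is held as a string.

```typescript
// A range source is either a fixed number of hours, or a reference to an
// entity (e.g. an input_number) whose state holds that number.
// All names here are hypothetical, chosen for illustration only.
type HoursAgoSource = number | { entity: string };

interface HistoryOptions {
  hoursAgo?: HoursAgoSource;
}

interface EntityOptions extends HistoryOptions {
  entity: string;
}

type States = Record<string, { state: string } | undefined>;

// Turn a source into a number of hours, or undefined if it cannot be resolved.
function resolveHoursAgo(
  source: HoursAgoSource | undefined,
  states: States,
): number | undefined {
  if (source === undefined) return undefined;
  if (typeof source === "number") return source;
  const value = Number(states[source.entity]?.state);
  return Number.isFinite(value) ? value : undefined;
}

// The entity-level setting wins; otherwise fall back to the card-level one.
function historyStartFor(
  card: HistoryOptions,
  entity: EntityOptions,
  states: States,
  now: Date = new Date(),
): Date | undefined {
  const hours =
    resolveHoursAgo(entity.hoursAgo, states) ??
    resolveHoursAgo(card.hoursAgo, states);
  if (hours === undefined) return undefined;
  return new Date(now.getTime() - hours * 60 * 60 * 1000);
}
```

With a card-level value of 24 hours, an entity without its own setting gets a start time 24 hours back, while an entity pointing at an input_number whose state is "6" gets a start time 6 hours back.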

In Summary

Although still fairly new, I think the ha-map-card represents a pretty monumental improvement to Home Assistant’s mapping and location data exploration capabilities. It provides significantly greater control over how location information is used and displayed, as well as several other advanced features including the ability to configure custom tile layers and web mapping services.

For people looking to get more location data into their Home Assistant, if you are a Google Maps user I’d recommend installing pnbruckner/ha-google-maps via HACS as a nicer way to set up access to your location history.

If you would like to set up a page similar to the one in my screenshot, you’ll need to install card-mod (to add a little extra CSS), then set up a new tab with the “Panel (1 Card view)” view type.

My YAML for the card then looks roughly as follows:

    type: grid
    square: false
    columns: 1
    cards:
      - type: energy-date-selection
        card_mod:
          style: |
            ha-card {
              z-index: 999999999;
              position: absolute;
              height: 80px;
              width: 80%;
              background: #fff;
              top: 75px;
              right: 10px;
              max-width: 450px;
            }
      - type: custom:map-card
        history_date_selection: true
        zoom: 10
        card_size: 16
        entities:
          - entity: device_tracker.your_entity_1
          - entity: device_tracker.your_entity_2
          - entity: device_tracker.your_entity_3
          - entity: device_tracker.your_entity_4
          ...

Not perfect, but hopefully a nice starting point for a more easily explorable map.

Final thoughts

Having now worked on this and another simple card, I have to say I’ve quite enjoyed the experience of developing in the Home Assistant ecosystem.

That said, the learning curve was somewhat steeper than I expected, simply due to the difficulty in finding any definitive docs on how to go about most things. While there is a boilerplate card and a lot of info filed away in forum posts, I ended up spending the majority of my time reading the source of other cards in order to work out how they achieved things. Looking back, a lot of it now seems pretty obvious, but that was definitely not the case when I first started poking around.

Some of this may well just be me being rusty. I’ve primarily been an API developer the last few years, so my familiarity with things like Lit and TypeScript was minimal (although I did manage to sidestep the TypeScript, for now at least).

It did also seem a bit odd that, so far as I can tell, there is no proper way to ask Home Assistant to load built-in card components without needing to have Home Assistant’s own card on the page to do so. As a result you end up having to reinvent wheels, despite there being a perfectly good component in Home Assistant already.

All that said, after a bit of effort I managed to get my head around the basics and build out some features I’ve wanted for ages. You can’t really ask for anything more than that.